Sparsity: Graphs, Structures, and Algorithms

It seems likely that these problems will be amenable to algorithms based on randomized projections that dramatically reduce the effective dimensionality of the underlying problems. Such techniques have recently proven highly effective for the related problems of finding approximate lists of nearest neighbors for clouds of points in high-dimensional spaces and of constructing approximate low-rank factorizations of large matrices. In both cases, a key observation is that the problem of distortions of distances that is inherent to …

The study of computational problems on graphs has long been a central area of research in computer science.

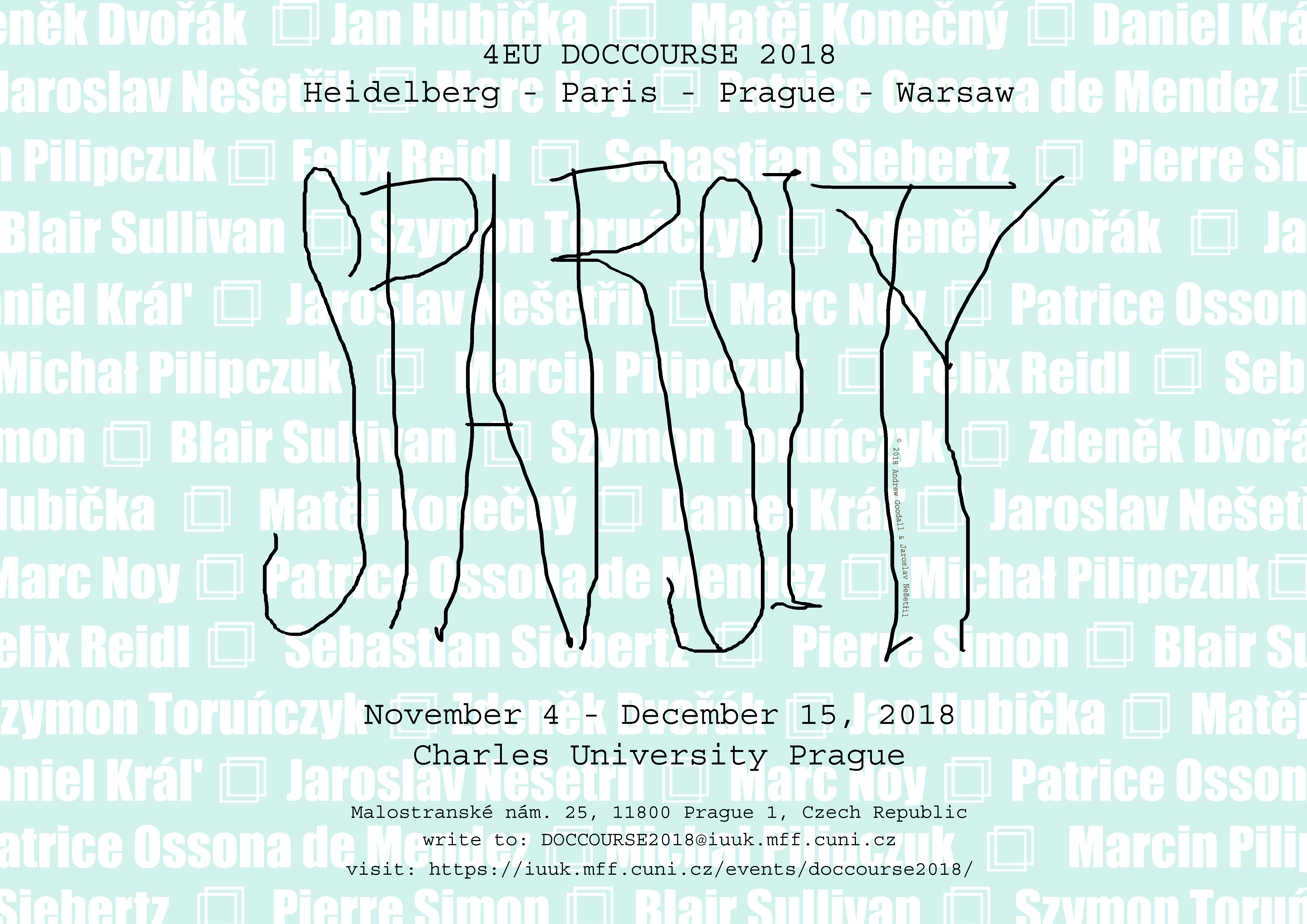

- Patrice Ossona de Mendez (Author of Sparsity).

- Sparsity in Algorithms, Combinatorics and Logic

Image courtesy of Eli Upfal.

The sparsity of input can be used in a variety of ways, e.g. …

This motivates a theoretical study of the abilities and limitations of sparsity-based methods. However, a priori it is not clear how to even define sparsity formally.

Multiple sparsity-oriented paradigms have been studied in the literature, e.g. … However, many of those paradigms are either too restrictive to model real-life applications or too general to yield strong tractability results.

The central notions of the framework introduced by Nešetřil and Ossona de Mendez are classes of bounded expansion and nowhere dense classes. It quickly turned out that these notions can be used to build a mathematical theory of sparse graphs that offers a wealth of tools, leading to new techniques and powerful results. This theory has been developed extensively in recent years.
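For reference, both notions are usually stated in terms of shallow minors: $H$ is an $r$-shallow minor of $G$ (written here $H \preccurlyeq_r G$, an ad-hoc notation for this sketch) if $H$ can be obtained from a subgraph of $G$ by contracting vertex-disjoint connected subgraphs of radius at most $r$. A sketch of the standard definitions, for a graph class $\mathcal{C}$:

```latex
\begin{align*}
  \nabla_r(G) &= \max\left\{ \frac{|E(H)|}{|V(H)|} \;:\; H \preccurlyeq_r G \right\}, \\
  \mathcal{C} \text{ has bounded expansion}
    &\iff \exists f\colon \mathbb{N}\to\mathbb{N}\ \ \forall G \in \mathcal{C}\ \ \forall r \in \mathbb{N}:\ \nabla_r(G) \le f(r), \\
  \mathcal{C} \text{ is nowhere dense}
    &\iff \forall r \in \mathbb{N}\ \ \exists t \in \mathbb{N}\ \ \forall G \in \mathcal{C}:\ K_t \not\preccurlyeq_r G.
\end{align*}
```

Every class of bounded expansion is nowhere dense: an $r$-shallow minor isomorphic to $K_t$ has edge density $(t-1)/2$, which a bound $\nabla_r(G) \le f(r)$ rules out once $t > 2f(r) + 1$. The converse does not hold, which is why nowhere denseness is the more general of the two notions.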

This defines the data type provided by the srcEdgeData and dstEdgeData parameters. However, the majority of these methods require the sampling patterns during training, which limits their application to a specific problem class. Function nvgraphDestroyGraphDescr. This function sets the index of the edge data where the mask for edges will be stored. The eigenvectors are stored in row-major order and normalized by row and by column. For highly sparse graphs we can leverage sparse libraries, and an even faster solution for both dense and sparse graphs is to perform the computations on a GPU.
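Sparse graph libraries typically store adjacency in a compressed sparse row (CSR) layout, so memory grows with the number of edges rather than with the square of the number of vertices. The following is a minimal pure-Python sketch of that layout, assuming an undirected graph given as an edge list over vertices numbered 0 to n-1; `build_csr` and `neighbours` are hypothetical helper names, not part of nvGRAPH or any other library.

```python
from typing import List, Tuple


def build_csr(num_vertices: int, edges: List[Tuple[int, int]]) -> Tuple[List[int], List[int]]:
    """Build CSR arrays (offsets, targets) for an undirected graph.

    The neighbours of v are targets[offsets[v]:offsets[v + 1]], so storage is
    O(n + m) instead of the O(n^2) of a dense adjacency matrix.
    """
    # Count each endpoint once per edge: the graph is undirected.
    degree = [0] * num_vertices
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1

    # Prefix sums of the degrees give the start of each vertex's slice.
    offsets = [0] * (num_vertices + 1)
    for v in range(num_vertices):
        offsets[v + 1] = offsets[v] + degree[v]

    # Fill each slice, tracking the next free position per vertex.
    targets = [0] * offsets[num_vertices]
    cursor = offsets[:-1]  # a copy, so offsets itself is not modified
    for u, v in edges:
        targets[cursor[u]] = v
        cursor[u] += 1
        targets[cursor[v]] = u
        cursor[v] += 1
    return offsets, targets


def neighbours(offsets: List[int], targets: List[int], v: int) -> List[int]:
    """Neighbours of v, read as one contiguous slice."""
    return targets[offsets[v]:offsets[v + 1]]


# A path 0-1-2-3: 4 vertices, 3 edges, 6 stored adjacency entries.
offsets, targets = build_csr(4, [(0, 1), (1, 2), (2, 3)])
print(offsets)                          # [0, 1, 3, 5, 6]
print(neighbours(offsets, targets, 2))  # [1, 3]
```

Reading a neighbourhood is a single contiguous slice, which is what makes this layout friendly to both vectorized CPU code and GPU kernels.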

It is particularly remarkable that the concepts of classes of bounded expansion and nowhere dense classes can be connected, often in surprising ways, to fundamental ideas from several other fields of computer science, providing complementary viewpoints on the subject. On the one hand, the foundations of the area are grounded in structural graph theory, which aims to describe the structure of graphs through various decompositions and auxiliary parameters.
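One of the simplest auxiliary parameters of this kind is degeneracy: the smallest $d$ such that every subgraph has a vertex of degree at most $d$. A subgraph of minimum degree $d$ has edge density at least $d/2$, so bounding the edge density of all subgraphs (the $r = 0$ case of bounded expansion) also bounds degeneracy. The sketch below computes it by repeatedly deleting a vertex of minimum remaining degree; the helper names are hypothetical, and this is an illustration rather than code from the source.

```python
from typing import Dict, Iterable, List, Set, Tuple


def from_edges(edges: Iterable[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Undirected adjacency sets from an edge list (hypothetical helper)."""
    adj: Dict[int, Set[int]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def degeneracy_ordering(adj: Dict[int, Set[int]]) -> Tuple[int, List[int]]:
    """Return (degeneracy, elimination order).

    Repeatedly removes a vertex of minimum remaining degree, keeping vertices
    in buckets indexed by current degree; the degeneracy is the largest
    degree seen at removal time. Uses O(n + m) bucket operations.
    """
    degree = {v: len(ns) for v, ns in adj.items()}
    max_deg = max(degree.values(), default=0)
    buckets: List[Set[int]] = [set() for _ in range(max_deg + 1)]
    for v, d in degree.items():
        buckets[d].add(v)

    removed: Set[int] = set()
    order: List[int] = []
    degeneracy = 0
    d = 0
    for _ in range(len(adj)):
        # Removing a vertex lowers neighbour degrees by at most one,
        # so the minimum can only have dropped to d - 1.
        d = max(d - 1, 0)
        while not buckets[d]:
            d += 1
        v = buckets[d].pop()
        degeneracy = max(degeneracy, d)
        order.append(v)
        removed.add(v)
        for u in adj[v]:
            if u not in removed:
                buckets[degree[u]].discard(u)
                degree[u] -= 1
                buckets[degree[u]].add(u)
    return degeneracy, order


# A 3x3 grid (planar, every subgraph has a vertex of degree <= 2).
grid = from_edges([(3 * r + c, 3 * r + c + 1) for r in range(3) for c in range(2)]
                  + [(3 * r + c, 3 * (r + 1) + c) for r in range(2) for c in range(3)])
print(degeneracy_ordering(grid)[0])  # 2
```

The elimination order is useful in its own right: every vertex has at most $d$ neighbours that appear later in it, which is the starting point for many sparsity-based algorithms. The generalized coloring numbers used in this theory extend the same idea from single edges to paths of bounded length.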

On the other hand, nowhere denseness seems to delimit the border of algorithmic tractability of first-order logic, providing a link to finite model theory and its computational aspects. Finally, there is a fruitful transfer of ideas to and from the field of algorithm design: sparsity-based methods can be used to design new, efficient algorithms, especially in the paradigms of parameterized complexity, approximation algorithms, and distributed computing.
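A well-known and much simpler illustration of sparsity-driven algorithm design (not one taken from the source) is triangle counting: removing minimum-degree vertices one at a time and orienting each edge toward the later endpoint leaves every vertex with at most $d$ outgoing edges, where $d$ is the degeneracy, so checking pairs of outgoing edges costs $O(m \cdot d)$ rather than $O(n^3)$. The sketch assumes undirected graphs given as adjacency sets; all names are hypothetical.

```python
import heapq
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple


def from_edges(edges: Iterable[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Undirected adjacency sets from an edge list (hypothetical helper)."""
    adj: Dict[int, Set[int]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def degeneracy_rank(adj: Dict[int, Set[int]]) -> Dict[int, int]:
    """Position of each vertex in a minimum-degree elimination order.

    Uses a heap with lazy deletion: outdated (degree, vertex) entries are
    skipped when popped.
    """
    degree = {v: len(ns) for v, ns in adj.items()}
    heap = [(d, v) for v, d in degree.items()]
    heapq.heapify(heap)
    rank: Dict[int, int] = {}
    while heap:
        d, v = heapq.heappop(heap)
        if v in rank or d != degree[v]:
            continue  # stale entry
        rank[v] = len(rank)
        for u in adj[v]:
            if u not in rank:
                degree[u] -= 1
                heapq.heappush(heap, (degree[u], u))
    return rank


def count_triangles(adj: Dict[int, Set[int]]) -> int:
    """Count triangles by orienting each edge toward its later endpoint.

    Every vertex keeps at most d forward neighbours (d = degeneracy), and
    each triangle is counted once, at its earliest vertex, so the counting
    loop does O(m * d) work.
    """
    rank = degeneracy_rank(adj)
    count = 0
    for u, ns in adj.items():
        forward = [w for w in ns if rank[w] > rank[u]]
        for w, x in combinations(forward, 2):
            if x in adj[w]:
                count += 1
    return count


k5 = from_edges([(a, b) for a in range(5) for b in range(a + 1, 5)])
print(count_triangles(k5))  # 10
```

The same orientation trick underlies more elaborate algorithms on sparse classes, where the small out-degree keeps local searches cheap.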